Overview

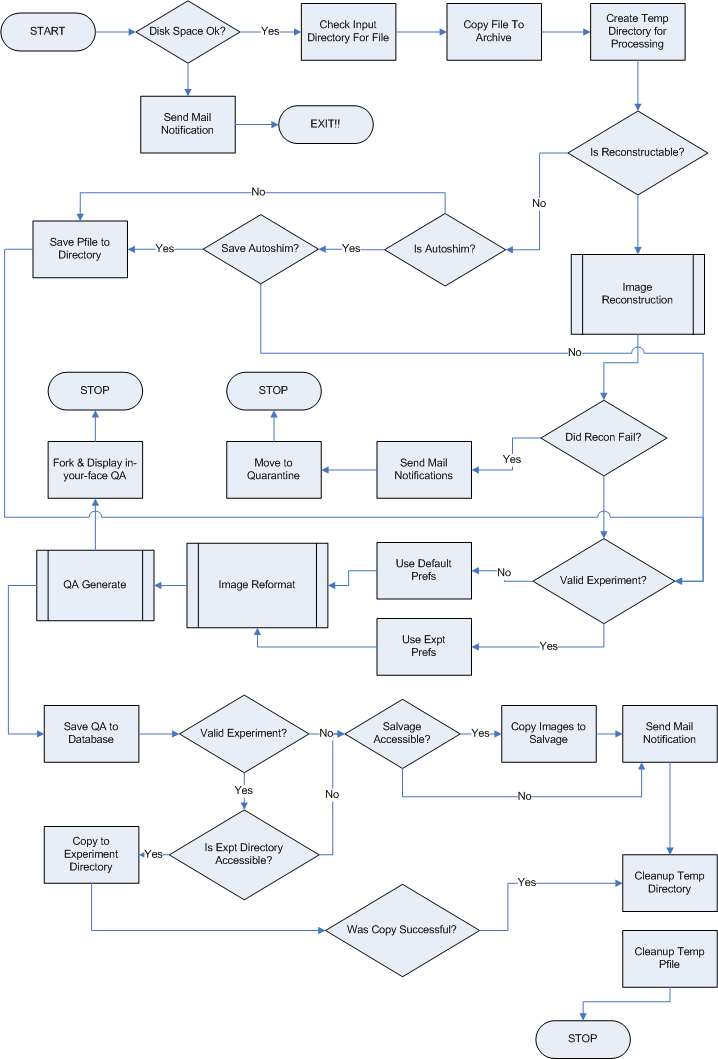

BIAC’s Processing Pipeline is a software system that automatically transfers data off the scanner, runs a series of automated processes on each dataset, and transfers the processed results to a directory where the user can access them. This process depends on the dataset being assigned to a valid Experiment. Once assigned, the data is processed according to Experiment-based preferences, and the results are transferred to the appropriate Experiment directory, where users with data access privileges can retrieve them.

Features

- End-users can email Experiment Preferences to the data administrator to have their data delivered in a specific directory structure or with the images in a different format.

- All datasets come over with an XML descriptor that can be used to convert images to/from common image formats.

- All datasets also come over with a copy of the pfile header (labeled .pfh in the user's run directory) so that the XML descriptor can be recreated with the newest tools.

- Raw data files that are not reconstructable are copied over to the end-user's storage server so that users can reconstruct the data themselves. This feature is primarily for our engineers who are developing their own reconstruction programs that are not yet ready for general use.

Processing Daemon Flowchart

Frequently Asked Questions

Which data sent to the processing daemon is backed up, and how frequently?

All functional k-space data and DICOM files that are received are first copied to an archive directory on the local disk. A nightly cron job then copies the archive to a tape backup partition monitored by our network and storage administrator. Our backup administrator regularly moves the files from that partition to tape and stores the tapes offsite.

Revision Info

- 1.4: Added an ALIVE file check to ensure storage servers are mounted properly.
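
This page does not describe how the ALIVE check is implemented. One common way to do such a check is with a sentinel file: a file named `ALIVE` is kept at the top of the storage server's exported directory, so it is visible at the mountpoint only while the server is actually mounted. The sketch below assumes that layout; the function name and the placement of the file are illustrative, not taken from the pipeline's code.

```python
import os


def server_is_alive(mountpoint, sentinel="ALIVE"):
    """Return True if the sentinel file is visible under mountpoint.

    Assumption: the sentinel lives on the remote server, so an unmounted
    (empty, local) mountpoint directory will not contain it.
    """
    return os.path.isfile(os.path.join(mountpoint, sentinel))
```

A daemon could call this before each transfer and defer the copy when it returns `False`.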

Limitations and Suggested Improvements

- When the processing daemon encounters a dataset with an invalid Experiment, it should not start processing the dataset until the Experiment has been recognized. Experiment Preferences cannot be applied to an image set after the data has gone through the pipeline, so end-users are stuck with the data in the default BIAC-recommended format rather than the one they originally specified.

- If the storage servers are not mounted properly, results are written to the local mountpoint directory instead of the server, taking up local disk space that is not recovered until the files are deleted. A better check is needed before the copy occurs to validate that the mount exists, that the disk is remote, and that the mount is accessible.
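
The three validations suggested in the last item (the mount exists, the disk is remote, the mount is accessible) could be sketched roughly as follows. This is a minimal illustration, not the pipeline's code: it assumes a Linux host where the mount table is available in the `/proc/mounts` layout, the set of "remote" filesystem types is a guess to be adjusted, and the function names are hypothetical. Mountpoints containing spaces (escaped in `/proc/mounts`) are not handled.

```python
import os

# Filesystem types treated as "remote"; this set is an assumption.
REMOTE_FSTYPES = {"nfs", "nfs4", "cifs", "smbfs"}


def mount_fstype(mountpoint, mounts_text):
    """Return the filesystem type mounted at mountpoint, or None if nothing is.

    mounts_text uses the /proc/mounts layout: device, mountpoint, fstype, ...
    """
    target = os.path.normpath(mountpoint)
    fstype = None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 3 and os.path.normpath(fields[1]) == target:
            fstype = fields[2]  # a later entry shadows an earlier one
    return fstype


def destination_problems(mountpoint, mounts_text):
    """Return a list of reasons not to copy to mountpoint (empty means OK)."""
    fstype = mount_fstype(mountpoint, mounts_text)
    if fstype is None:
        return ["nothing is mounted at " + mountpoint]
    problems = []
    if fstype not in REMOTE_FSTYPES:
        problems.append("filesystem type %r is not remote" % fstype)
    if not os.access(mountpoint, os.W_OK | os.X_OK):
        problems.append("mount is not writable by the daemon")
    return problems
```

On a Linux host, the mount table would be read with `open("/proc/mounts").read()` and passed in as `mounts_text`; the copy would proceed only when the returned list is empty. Passing the table in as text keeps the check easy to exercise without a real mount.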