Processing Pipeline

Overview

BIAC's Processing Pipeline is a software system designed to automatically transfer data off the scanner, run a series of automated processes on the dataset, and transfer the processed results to a directory where the user can access them. This process depends on the dataset being assigned to a valid Experiment. Once assigned, data is processed according to Experiment-based preferences, and the results are transferred to the appropriate Experiment directory, where users with data access privileges can retrieve them.

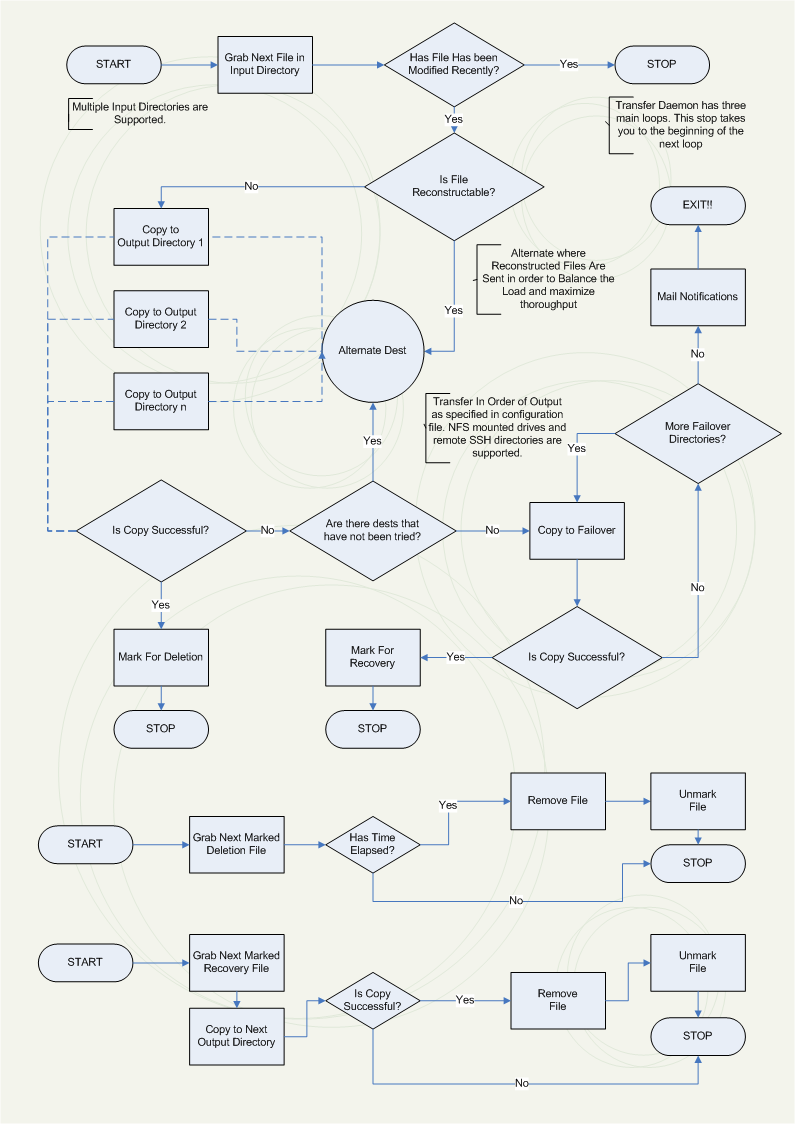

Transfer Daemon Overview

BIAC's transd software is designed to run on GE Signa scanners, monitoring directories for k-space data and transferring raw data files off the scanner via NFS mounts and/or SCP connections.

Transfer Daemon 1.0 Flowchart

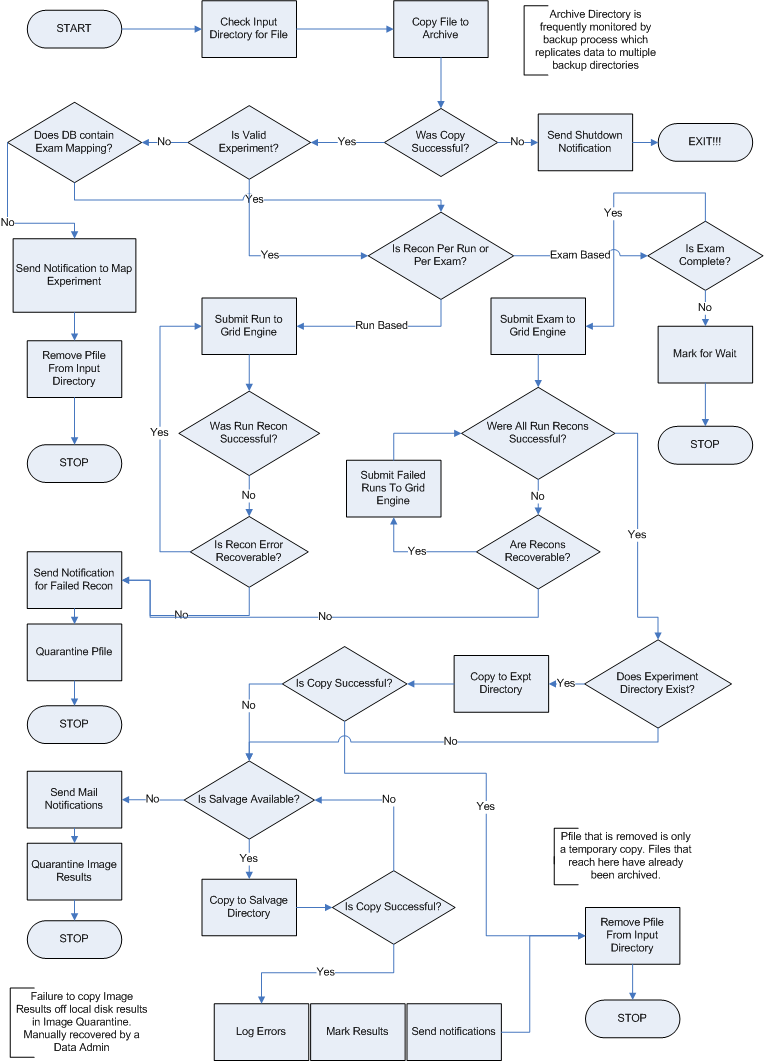

Processing Daemon 2.0 Beta Flowchart

Note: This primarily describes Functional Data Flow once the data has been transferred off the scanner and onto a valid processing server. Anatomical Data Flow is similar; only the set of processes applied to the input data differs.

Backup Daemon Flowchart & Backup Admin Workflow

User Frequently Asked Questions

How does the pipeline assign a dataset to an Experiment?

For functional data, the Patient ID entered on the scanner console is the primary means of identification. Our software takes what was entered on the console, strips out the punctuation, and lowercases all text before comparing it against a list of valid Experiments.
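The matching step described above can be sketched as follows. This is only an illustration, not the pipeline's actual code: the function names, the use of ASCII punctuation as the definition of "punctuation", and the choice to normalize the Experiment names the same way before comparing are all assumptions, and the Experiment names in the usage note are made up.

```python
import string
from typing import Iterable, Optional

# Assumption: "punctuation" means the ASCII punctuation characters.
_PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def normalize_patient_id(raw_id: str) -> str:
    """Strip punctuation, then lowercase, as the FAQ answer describes."""
    return raw_id.translate(_PUNCT_TABLE).lower()


def match_experiment(raw_id: str, valid_experiments: Iterable[str]) -> Optional[str]:
    """Return the Experiment whose normalized name equals the normalized ID.

    Assumption: Experiment names are normalized the same way before comparing.
    Returns None when nothing matches.
    """
    normalized = normalize_patient_id(raw_id)
    for name in valid_experiments:
        if normalize_patient_id(name) == normalized:
            return name
    return None
```

With hypothetical Experiments `["Study.01", "Other.02"]`, a console entry of `STUDY-01` normalizes to `study01` and is matched to `Study.01`, while an entry that matches nothing returns `None`.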

The tech entered the correct Experiment ID on the 4.0T console, but the dataset was not properly assigned to an Experiment. What happened?

If a period was entered in the Patient ID field, GE's software appears to have a bug in which the characters following the period are truncated before the ID is written to the raw data header. As a result, the truncated Patient ID is not recognized and the image results are transferred to the Salvage directories. This applies only to functional data from the 4.0T.
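To see why the truncation defeats the match, here is a small sketch that mimics the reported behaviour. It assumes the header keeps only the text before the first period, and that matching is a punctuation-stripped, lowercased comparison. The function names and the Experiment ID `Study.01` are hypothetical.

```python
import string

# Assumption: "punctuation" means the ASCII punctuation characters.
_PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def normalize(patient_id: str) -> str:
    """Strip punctuation and lowercase the ID."""
    return patient_id.translate(_PUNCT_TABLE).lower()


def truncate_at_period(patient_id: str) -> str:
    """Mimic the reported bug: drop everything after the first period."""
    return patient_id.split(".", 1)[0]


def is_recognized(console_entry: str, valid_normalized_ids: set) -> bool:
    """Apply the truncation, then the normalization, then look the ID up."""
    header_value = truncate_at_period(console_entry)
    return normalize(header_value) in valid_normalized_ids
```

Under these assumptions, entering `Study.01` leaves only `Study` in the header, which normalizes to `study` and fails to match `study01`. Entering `Study01` without the period is recognized.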

How do I check the status of my data?

On the 'Services' page of the BIAC website, click 'Exam Tracker' and enter your five-digit exam number. If the processing daemon has picked up and processed the files, you'll see the relevant log information.

I see errors on the Exam Tracker page and my data did not show up. What do I need to do?

If the error you see is 'Experiment Not Found', please submit a trouble ticket so our data administrators know where the data was supposed to be sent. They will respond to the ticket as soon as possible.

Looking for the Pipeline/Data Administration FAQ? Restricted. Request access by sending an email to Josh or Jimmy.

Processing Daemon History

- Processing Daemon v1.0 - Intended to process datasets per run on multiple processing servers as the datasets come over.

- Processing Daemon v1.7 - Manual intervention required for SENSE and b0 corrected data. Why? SENSE and b0 corrected recons expect to use the calibration run immediately preceding the run being reconstructed. When the transfer daemon transfers to multiple nodes, those nodes must coordinate per exam, per run. We will wait until the cluster processing daemon is implemented to devise a solution, as it is easier to coordinate sequential run processing when there is only one datastream.

- Software ToDo List & Change Log - A constantly updated list of changes that need to be made to pipeline software in priority order and change log for systems.
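The calibration dependency noted under v1.7 can be illustrated with a short sketch. It is not the daemon's implementation. It assumes each run is a `(run_number, kind)` tuple in acquisition order, that `kind` is either `"calibration"` or `"functional"`, and that a run should use the most recent calibration run seen earlier in the same stream. All of these names are hypothetical.

```python
from typing import List, Optional, Tuple

Run = Tuple[int, str]  # (run_number, kind); kinds here are hypothetical labels


def pair_with_calibration(runs: List[Run]) -> List[Tuple[int, Optional[int]]]:
    """Pair each functional run with the latest calibration run before it.

    Returns (functional_run, calibration_run) tuples; the calibration entry
    is None when no calibration run has been seen in this stream.
    """
    pairs = []
    last_calibration = None
    for run_number, kind in runs:
        if kind == "calibration":
            last_calibration = run_number
        else:
            pairs.append((run_number, last_calibration))
    return pairs
```

With a single stream such as runs 1 (calibration), 2, 3, 4 (calibration), 5, every functional run finds its calibration run. If runs 3 and 5 alone land on a second node, that node sees no calibration run at all, which is the coordination problem described above.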