Region of Interest Based Eyetracking Analysis

These steps gather eyetracking information based on specific regions of interest (ROIs) drawn on stimuli from the experimental task. They assume that your task was image based (or that images can be created to mimic the task), that there is XML behavioral output from the task, and that your eyetracking was collected through Cigal or Viewpoint. Every step except drawing the ROIs is performed separately for each run.

Behavioral XML

The first step is to create behavioral XML files using the BXH/XCEDE tools. Please see the documentation for eprime2xml, showplay2xml, or eventstable2xml to create the files.

Your resulting event nodes should include (at minimum):

- the image name

- onset

- duration

For example:

    <event type="image" units="sec">
      <onset>425.894</onset>
      <duration>4</duration>
      <value name="imgName">12F_CA_C.bmp</value>
      <value name="regType">positive</value>
      <value name="trialType">FACE</value>
      <value name="response">1</value>
      <value name="RT">244</value>
    </event>
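Before moving on, it can be worth checking that every event node carries the three required pieces of information. The following is a minimal Python sketch, not part of the BXH/XCEDE tools. It assumes events shaped like the example above (an `imgName` value plus `onset` and `duration` children); the helper names `local` and `missing_fields` are hypothetical. Real BXH files are usually namespaced, so the sketch compares local tag names only.

```python
import xml.etree.ElementTree as ET

REQUIRED = ("onset", "duration")


def local(tag):
    """Strip any '{namespace}' prefix from an element tag."""
    return tag.rsplit("}", 1)[-1]


def missing_fields(xml_text, img_attr="imgName"):
    """Return (event_index, missing_names) for every incomplete event."""
    root = ET.fromstring(xml_text)
    problems = []
    events = [el for el in root.iter() if local(el.tag) == "event"]
    for idx, event in enumerate(events):
        children = {local(child.tag) for child in event}
        missing = [name for name in REQUIRED if name not in children]
        has_img = any(
            local(child.tag) == "value" and child.get("name") == img_attr
            for child in event
        )
        if not has_img:
            missing.append(img_attr)
        if missing:
            problems.append((idx, missing))
    return problems


# Hypothetical two-event file: the second event is incomplete on purpose.
sample = """<events>
  <event type="image" units="sec">
    <onset>425.894</onset>
    <duration>4</duration>
    <value name="imgName">12F_CA_C.bmp</value>
  </event>
  <event type="image" units="sec">
    <onset>430.5</onset>
  </event>
</events>"""

print(missing_fields(sample))
```

An empty list means every event has an image name, an onset, and a duration.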

ROI Drawing

Next, ROIs should be drawn for each image for which you wish to calculate eyetracking data. These ROIs should be saved in an XML-based format. Chris Petty has written a matlab-based function that lets you draw circles/ellipses or squares/rectangles on input images. The result is an XML file that can be merged into your behavioral XML using the BXH tool bxh_eventmerge.

All of the following steps are based on this type of ROI.

- The first thing you need is a text file with all the image paths ( the full path to the image is recommended ).

- file_names.txt

    /home/petty/eyetracking/sys_service/37M_CA_C.bmp
    /home/petty/eyetracking/sys_service/HAI_31.bmp
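If there are many images, the list can be generated rather than typed. This is a small Python sketch under stated assumptions: all images live in one directory, and the function name `write_image_list` and the default extensions are hypothetical choices, not part of the drawing tools.

```python
from pathlib import Path


def write_image_list(image_dir, out_file, extensions=(".bmp", ".jpg")):
    """Write the absolute path of every image in image_dir, one per line."""
    image_dir = Path(image_dir).resolve()
    paths = sorted(
        p for p in image_dir.iterdir()
        if p.is_file() and p.suffix.lower() in extensions
    )
    # One full path per line, matching the file_names.txt layout above.
    Path(out_file).write_text("".join(f"{p}\n" for p in paths))
    return paths


# Example call (directory taken from the listing above):
# write_image_list("/home/petty/eyetracking/sys_service", "file_names.txt")
```

The sample matlab script reads the list with `textread(...,'%s')`, which splits on whitespace, so image paths containing spaces are best avoided.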

- Also, either add the path to the actual drawing function “imgROI_keyfcn”, or make a copy of it to your own directory ( the most current copy is \\Munin\Data\Programs\User_Scripts\petty\matlab\ )

- Screen resolution is the resolution of the display that the task was run on. The drawing function creates a representation based on that size.

Here is a sample script to run the drawing function:

- drawer_sample.m

    %% add path to functions
    %addpath \\Munin\Data\Programs\User_Scripts\petty\matlab\
    addpath ~/net/munin/data/Programs/User_Scripts/petty/matlab/

    %% full path to your text file, which contains images ( also full path )
    fileList = textread('file_names.txt','%s'); %read in list

    XML.rois = {}; %create empty XML
    outName = 'my_output.xml'; %name of output .xml with all ROIs ( file will overwrite on each save )

    %screen resolution of task display
    xRes = 1024;
    yRes = 768;
    scrSize = [ xRes yRes ];

    imgIDX = 0; %leave 0 to start at first image
    figUD = struct('imgIDX',imgIDX,'XML',XML);

    %loop that finds images
    imgs = {};
    for file=1:length(fileList)
        [path name ext] = fileparts(fileList{file});
        imgs{file} = struct('fname',[name ext],'fpath',fileList{file});
    end

    %% this opens the drawing window and runs functions: do not edit %%
    fscreen = repmat(uint8(0),[scrSize(2),scrSize(1),3]);
    axes_h = axes;
    imshow(fscreen,'Parent',axes_h,'InitialMagnification',100,'Border','tight');
    text(10,60,{'i - image','e - ellipse','r - rectangle','s - save','q - quit' },'HorizontalAlignment','left','BackgroundColor',[.5 .5 .5],'Color',[1 1 1]);
    figH = gcf;
    set(figH,'Toolbar','none','Resize','off');
    set(figH,'UserData',figUD);
    hold on;

    % calls the functions
    set(figH,'KeyPressFcn','imgROI_keyfcn(gcbo,axes_h,imgs,scrSize,outName)');

Once the text file has been created with the full path to each image, save and run your version of the above script. You should only need to edit the function path, the screen size, and the file names. The script opens a representation of the stimulus screen; press “i” to load the first image.

The current keyboard options are (these appear inside of the drawer) :

    i - load the next image
    e - draw an ellipse/circle
    r - draw a rectangle/square
    s - save the XML
    q - quit

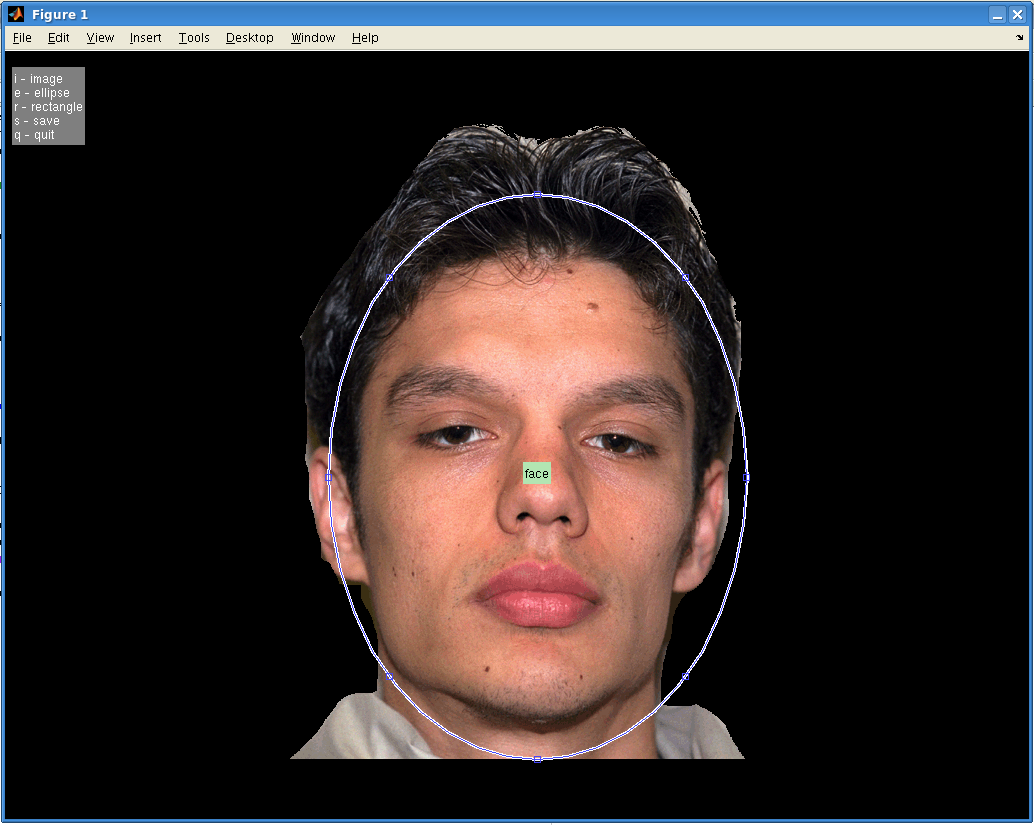

Once the image is loaded, press “e” or “r” to start your first ROI. There will be a small circle or square depending on the shape you selected. Drag the whole ROI to your desired location, then drag the sides or corners to form your desired shape. Once satisfied double-click on the ROI to name it. The name will appear inside the XML node associated with this ROI as well on the screen inside the associated ROI. You can draw multiple ROIs on each image, but be aware that if they overlap it will affect your final “hit” percentage.

Here's an example of a drawn/labled elliptical roi:

Once finished with the image, press “s” to save the current progress. This will add to the output file on each save, so feel free to save after each region. Just make sure you aren't initially overwriting a previous file! Press “i” to move on to the next image in your list, or “q” to quit if you are finished.

Here's an example of the output:

    <rois>
      <roi type="block">
        <name>chin</name>
        <origin>695 529</origin>
        <size>100 100</size>
        <units>pixels</units>
        <value name="imgName">oscar.jpg</value>
      </roi>
      <roi type="block">
        <name>chest</name>
        <origin>447 387</origin>
        <size>100 100</size>
        <units>pixels</units>
        <value name="imgName">oscar.jpg</value>
      </roi>
    </rois>
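A quick way to confirm that every image received the ROIs you intended is to group the saved ROI names by image. The sketch below is an illustration in Python, not part of the drawing function. It assumes the un-namespaced `<rois>`/`<roi>` layout shown above; `rois_by_image` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict


def rois_by_image(xml_text, img_attr="imgName"):
    """Map each image name to the list of ROI names drawn on it."""
    root = ET.fromstring(xml_text)
    grouped = defaultdict(list)
    for roi in root.iter("roi"):
        # The image name is stored as <value name="imgName">...</value>.
        image = next(
            (v.text for v in roi.findall("value") if v.get("name") == img_attr),
            None,
        )
        grouped[image].append(roi.findtext("name"))
    return dict(grouped)


sample = """<rois>
  <roi type="block">
    <name>chin</name>
    <origin>695 529</origin>
    <size>100 100</size>
    <units>pixels</units>
    <value name="imgName">oscar.jpg</value>
  </roi>
  <roi type="block">
    <name>chest</name>
    <origin>447 387</origin>
    <size>100 100</size>
    <units>pixels</units>
    <value name="imgName">oscar.jpg</value>
  </roi>
</rois>"""

print(rois_by_image(sample))
```

Comparing the keys of the result against the names in file_names.txt shows which images still have no ROI.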

Merge Behavioral XML and ROI XML Data

Now it's necessary to merge the two sets of XML data using “bxh_eventmerge”. This inserts your ROI data into the associated nodes of your behavioral XML. It must be run on a machine with the XCEDE tools installed. The inputs are the behavioral XML and the ROI XML from the previous step.

- merge_rois_into_behav.sh

    #!/bin/sh

    bxh_eventmerge \
      --grabexcludeset 'value[@name="imgName"]' \
      --mergeeventpath '//*[local-name()="rois"]/*[local-name()="roi"]' \
      'value[@name="imgName"]' \
      '.' \
      BEHAVIORAL_run.xml \
      my_drawn_ET_ROI.xml

    ## comments
    # grabexcludeset ignores any matches
    # mergeeventpath is the path to the XML nodes from the ROI.xml, the example above represents
    # <rois>
    #   <roi/>
    # </rois>
    # 'value[@name="imgName"]' means that i am matching on name="imgName" in both behav and ET_ROI files (INPUTQUERY)
    # my GRABQUERY is just '.', means grab everything from ET_ROI.xml matches

The above script assumes that the image nodes in the behavioral XML and the ROI XML use the same name attribute:

    <value name="imgName">oscar.jpg</value>

The script grabs everything except this node and merges it into your behavioral XML. By default it writes to a new file with “merged-” prepended to the name of the input behavioral XML.

Because the ROI XML has all <roi> nodes inside of the <rois> root, the “--mergeeventpath” flag from above is required.

For example, the following image has 2 associated ROIs that have been merged over:

    <event type="image" units="sec">
      <onset>7</onset>
      <duration>4</duration>
      <value name="imgName">HAI_31.bmp</value>
      <value name="regType">look</value>
      <value name="trialType">HAI</value>
      <value name="response">2</value>
      <value name="RT">1266</value>
      <roi type="block">
        <name>r1</name>
        <origin>278 179</origin>
        <endpt>252 398</endpt>
        <units>pixels</units>
      </roi>
      <roi type="circle">
        <name>c1</name>
        <center>691 373</center>
        <size>280 454</size>
        <units>pixels</units>
      </roi>
    </event>
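The effect of the merge in this section can be pictured with a short Python sketch. This is only a conceptual illustration of matching on `imgName` and copying the ROI nodes across; it is not how bxh_eventmerge is implemented, it ignores XML namespaces, and the function name `merge_rois` is hypothetical.

```python
import copy
import xml.etree.ElementTree as ET


def merge_rois(behav_xml, roi_xml, img_attr="imgName"):
    """Copy each <roi> into every event showing the same image."""
    behav = ET.fromstring(behav_xml)
    rois = ET.fromstring(roi_xml)

    def image_of(node):
        for value in node.findall("value"):
            if value.get("name") == img_attr:
                return value.text
        return None

    for event in list(behav.iter("event")):
        event_image = image_of(event)
        if event_image is None:
            continue
        for roi in rois.iter("roi"):
            if image_of(roi) != event_image:
                continue
            merged = copy.deepcopy(roi)
            # Drop the matching key itself, mirroring the role of
            # --grabexcludeset in the script above.
            for value in merged.findall("value"):
                if value.get("name") == img_attr:
                    merged.remove(value)
            event.append(merged)
    return behav


behav = """<events>
  <event type="image" units="sec">
    <onset>7</onset>
    <duration>4</duration>
    <value name="imgName">HAI_31.bmp</value>
  </event>
  <event type="image" units="sec">
    <onset>12</onset>
    <duration>4</duration>
    <value name="imgName">HAI_21.bmp</value>
  </event>
</events>"""

roi_doc = """<rois>
  <roi type="block">
    <name>r1</name>
    <origin>278 179</origin>
    <endpt>252 398</endpt>
    <units>pixels</units>
    <value name="imgName">HAI_31.bmp</value>
  </roi>
</rois>"""

merged_doc = merge_rois(behav, roi_doc)
for event in merged_doc.iter("event"):
    print([roi.findtext("name") for roi in event.findall("roi")])
```

Only the first event receives the `r1` ROI, because only its image name matches, and the copied ROI no longer carries its own `imgName` value.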

Preprocess Eyetracking Data

This step converts either Cigal eyepos files or Viewpoint WKS files into CSV ( comma-separated values ), with distinct headers for each column. If the data is in WKS normalized space, it needs to be converted into screen resolution using the same values used in the ROI drawing step. Also, if the WKS data is not time-locked to the zero point of your task, a marker should be sent from your task to the eyetracking recording to mark the zero time.

Cigal files will already be in pixel resolution. Jim has also provided some tools to calibrate in cigal, then apply to the viewpoint software. If these methods have been used, the output will also be in pixel resolution. Otherwise, the valid resolution must be input into the preprocessing step.

Chris Petty has written a perl script (preprocess_eyetracking.pl) to handle the preprocessing with various input flags. It can be copied from \\Munin\Data\Programs\User_Scripts\petty, or run directly from cluster nodes.

    USAGE: preprocess_eyetracking.pl [options] --input FILE --output FILENAME --type TYPE

    where [OPTIONS] may be the following:
        --debug         enables debug mode
        --help          displays this help
        --input         eyetracking input data *
        --output        name of output file *
        --type          type of eyetracking data (raw,cigal) *
        --onsetMarker   character than marks zero point in raw files
        --convertpix    flag to convert to pixel dimensions
        --xres          x_screen resolution (only needed if not in pixels)
        --yres          y_screen resolution (only needed if not in pixels)

    perl preprocess_eyetracking.pl --input subj_12345.wks --output subj_12345.preproc.wks --type raw --onsetMarker M --convertpix --xres 1024 --yres 768

- --input: your eyetracking data ( either .wks or cigal eyepos file )

- --output: the name of your output csv file ( .txt, .csv, .wks are all fine )

- --type: “cigal” or “raw” ( different things happen depending on the type; “raw” represents viewpoint files )

For wks input, --onsetMarker is required if the data is not time-locked to your zero point. The time at which the --onsetMarker character is found within the eyetracking data is subtracted from all following input times, thereby zeroing the onsets.

If the wks input is not in pixel dimensions as mentioned above, --convertpix will tell the script to convert the normalized space into pixel dimensions using the X and Y values ( --xres, --yres ).

This output will be used in all future steps.

For example:

    VP_Code,TotalTime,DeltaTime,X_Gaze,Y_Gaze,Region,PupilWidth,PupilAspect
    12,0,M
    10,0.000900000000001455,0.033671,391,231,0,0.0844,1.0000
    10,0.034000000000006,0.033072,391,231,0,0.0813,0.9630
    10,0.0674000000000063,0.033401,391,231,0,0.0781,0.9615
    10,0.100700000000003,0.03333,391,231,0,0.0844,0.9630
    10,0.134100000000004,0.033404,391,231,0,0.0781,0.9615
    10,0.167400000000001,0.033327,403,229,0,0.0781,0.9615
    10,0.200800000000001,0.033382,414,228,0,0.0813,1.0000
    10,0.234200000000001,0.033359,414,228,0,0.0813,1.0000
    10,0.267600000000002,0.033438,426,227,0,0.0813,1.0000
    10,0.300899999999999,0.033295,437,226,0,0.0781,0.9615
    10,0.334299999999999,0.033418,449,225,0,0.0781,0.9615
    10,0.36760000000001,0.03331,449,225,0,0.0813,1.0000
    10,0.40100000000001,0.033379,460,224,0,0.0781,0.9615
    10,0.434400000000011,0.03336,472,209,0,0.0781,1.0000
    10,0.467800000000011,0.033405,460,224,0,0.0781,0.9615
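The two transformations described in this section, zeroing times at the onset marker and scaling normalized gaze positions to pixels, can be sketched in a few lines of Python. This is an illustration only and not the logic of preprocess_eyetracking.pl. It assumes the samples have already been read into `(time, x, y)` tuples with x and y in the 0..1 range, that samples before the marker are dropped, and that pixel values are rounded; the function name `zero_and_scale` is hypothetical.

```python
def zero_and_scale(samples, marker_time, xres, yres):
    """Re-time samples to the marker and scale normalized gaze to pixels.

    samples: iterable of (time_sec, x_norm, y_norm) with x/y in 0..1
    marker_time: time at which the onset marker was recorded
    """
    out = []
    for t, x, y in samples:
        if t < marker_time:
            continue  # recorded before the task's zero point (assumption: dropped)
        out.append((t - marker_time, round(x * xres), round(y * yres)))
    return out


# Hypothetical raw samples; the marker arrived at 12.5 s.
raw = [(12.0, 0.25, 0.5), (12.5, 0.382, 0.301), (13.0, 0.5, 0.5)]
print(zero_and_scale(raw, marker_time=12.5, xres=1024, yres=768))
```

The `xres` and `yres` values should be the same screen resolution that was used when drawing the ROIs, otherwise gaze points and ROIs will not share a coordinate space.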

Calculate Eyetracking Hits

Hits are eyetracking points where gaze fell within the ROIs defined in the ROI Drawing step, during the duration of each image.

Chris has written a perl script (merge_eyetracking.pl), which takes the merged behavioral-ROI xml and the preprocessed eyetracking data from the last step to calculate the hits. The script can be copied from: \\Munin\Data\Programs\User_Scripts\petty, or run directly from cluster nodes.

    USAGE: merge_eyetracking.pl [options] --eyetracking FILE --behavioral XML --output XML --type

    where [OPTIONS] may be the following:
        --debug #       enables debug mode
        --help          displays this help
        --eyetracking   eyetracking input data *
        --behavioral    behavioral XML input *
        --output        name of output XML *
        --type          type of eyetracking data (raw,cigal) *

- --eyetracking: preprocessed eyetracking CSV file

- --behavioral: behavioral XML ( with ROIs merged )

- --output: name of the XML file to output

- --type: cigal or raw ( same type as in the preprocessing step )

    perl merge_eyetracking.pl --eyetracking subj_12345_run1.preproc.wks --behavioral merged-subj12345_run1.xml --output subj1234_run1_roiHit.xml --type raw

The processing script adds elements to the behavioral XML that represent the hits and the number of valid eyetracking samples within your duration. This instance has 120 valid samples, with more than 50% inside the ROI:

    <event type="image" units="sec">
      <onset>34.5</onset>
      <duration>4</duration>
      <value name="imgName">37M_CA_C.bmp</value>
      <value name="regType">positive</value>
      <value name="trialType">FACE</value>
      <value name="response">1</value>
      <value name="RT">1026</value>
      <roi type="circle">
        <name>face</name>
        <center>515 443</center>
        <size>446 474</size>
        <units>pixels</units>
        <value name="hits">62</value>
        <value name="hit_percent">51.67</value>
      </roi>
      <value name="samples">120</value>
    </event>
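For readers who want to see what a hit test amounts to, here is a minimal Python sketch. It is not the code in merge_eyetracking.pl, and the geometry is an assumption: a block ROI is treated as a rectangle with a top-left `origin` and a width/height `size`, and a circle ROI as an ellipse whose `size` gives the full width and height around `center`. The gaze points are assumed to be already limited to the image's onset-to-offset window. All function names are hypothetical.

```python
def in_block(x, y, origin, size):
    """True if (x, y) lies in the rectangle with top-left origin and (w, h) size."""
    ox, oy = origin
    w, h = size
    return ox <= x <= ox + w and oy <= y <= oy + h


def in_ellipse(x, y, center, size):
    """True if (x, y) lies in the ellipse with the given center and full (w, h)."""
    cx, cy = center
    w, h = size
    return ((x - cx) / (w / 2)) ** 2 + ((y - cy) / (h / 2)) ** 2 <= 1


def hit_summary(points, inside):
    """Count hits among gaze points and express them as a percentage."""
    hits = sum(1 for x, y in points if inside(x, y))
    percent = round(100 * hits / len(points), 2) if points else 0.0
    return hits, percent


def face(x, y):
    """Hit test for the 'face' ROI from the example event above."""
    return in_ellipse(x, y, center=(515, 443), size=(446, 474))


# Hypothetical gaze data: 62 samples on the face, 58 in a screen corner.
points = [(515, 443)] * 62 + [(10, 10)] * 58
print(hit_summary(points, face))
```

With 62 of 120 samples inside the ellipse, the summary reproduces the `hits` and `hit_percent` values from the example node. Because each ROI is tested independently, a point inside two overlapping ROIs would count as a hit for both.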

At this point you could use these additional XML elements when extracting your onsets for FSL, etc by including some hit criteria in your xcede_extract_schedules.pl queries.
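As an illustration of a hit criterion, the Python sketch below keeps only the events whose named ROI reaches a minimum `hit_percent`. It is a stand-in for the idea and not a replacement for xcede_extract_schedules.pl, whose query syntax is not shown here. It assumes un-namespaced XML laid out like the example node above, and `onsets_with_hits` is a hypothetical name.

```python
import xml.etree.ElementTree as ET


def onsets_with_hits(xml_text, roi_name, min_percent):
    """Return (onset, duration) for events whose named ROI meets the threshold."""
    root = ET.fromstring(xml_text)
    selected = []
    for event in root.iter("event"):
        for roi in event.findall("roi"):
            if roi.findtext("name") != roi_name:
                continue
            percent = next(
                (float(v.text) for v in roi.findall("value")
                 if v.get("name") == "hit_percent"),
                None,
            )
            if percent is not None and percent >= min_percent:
                selected.append(
                    (float(event.findtext("onset")),
                     float(event.findtext("duration")))
                )
    return selected


sample = """<events>
  <event type="image" units="sec">
    <onset>34.5</onset>
    <duration>4</duration>
    <roi type="circle">
      <name>face</name>
      <value name="hits">62</value>
      <value name="hit_percent">51.67</value>
    </roi>
    <value name="samples">120</value>
  </event>
  <event type="image" units="sec">
    <onset>17.141</onset>
    <duration>4</duration>
    <roi type="circle">
      <name>face</name>
      <value name="hits">10</value>
      <value name="hit_percent">8.47</value>
    </roi>
    <value name="samples">118</value>
  </event>
</events>"""

print(onsets_with_hits(sample, "face", 50))
```

Only the first event passes a 50% threshold, so only its onset and duration would be carried into a schedule file.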

Visualizing Eyetracking Points in Matlab

Once the eyetracking data has been preprocessed and the behavioral/ROI XML files have been created, you can visualize the points in matlab. The following script runs a function that redraws the screen and image, redraws the ROI if that's the function chosen, and plots the eyetracking points. If ROIs are included, hits and misses will be differentiated.

Functions can be added to matlab path: \\Munin\Data\Programs\User_Scripts\petty\matlab\ or copied into a local location.

To run the plotter:

- et_visualization_sample.m

    %% add biac tools if needed
    %run /usr/local/packages/MATLAB/BIAC/startup.m

    %%add my functions
    %addpath \\Munin\Data\Programs\User_Scripts\petty\matlab\
    addpath /home/petty/net/munin/data/Programs/User_Scripts/petty/matlab/

    %%XML with behavioral and ROIS
    XML_docname = '11436_run04_roiHits_pupil.xml';

    %%preprocessed eyetracking data
    ET_filename = '11436_run4_pre.txt';

    %%Path to your actual images, an array if multiple locations possible
    imgPath = {'//hill/data/Dichter/AutReg.01/Stimuli/AutReg.01/','//hill/data/Dichter/AutReg.01/Notes/SubjectPics/PS06'};

    %%Image attribute name from behavioral XML [value name="THIS"]
    imgAttr = 'imgName';

    %%screen resolution of stimulus computer
    xRes = 1024;
    yRes = 768;
    scrSize = [ xRes yRes ];

    %%turn on scanpath lines
    linesOn = 1;

    %%turn on pause
    withPause = 0; %1=on, this pauses between every point

    %%run the function
    %%this will show image, ROI, and ET points
    ROI_redraw(XML_docname,ET_filename,imgPath,imgAttr,scrSize,linesOn,withPause);

    %%this will only show image and ET points
    %ET_plot(XML_docname,ET_filename,imgPath,imgAttr,scrSize,linesOn,withPause);

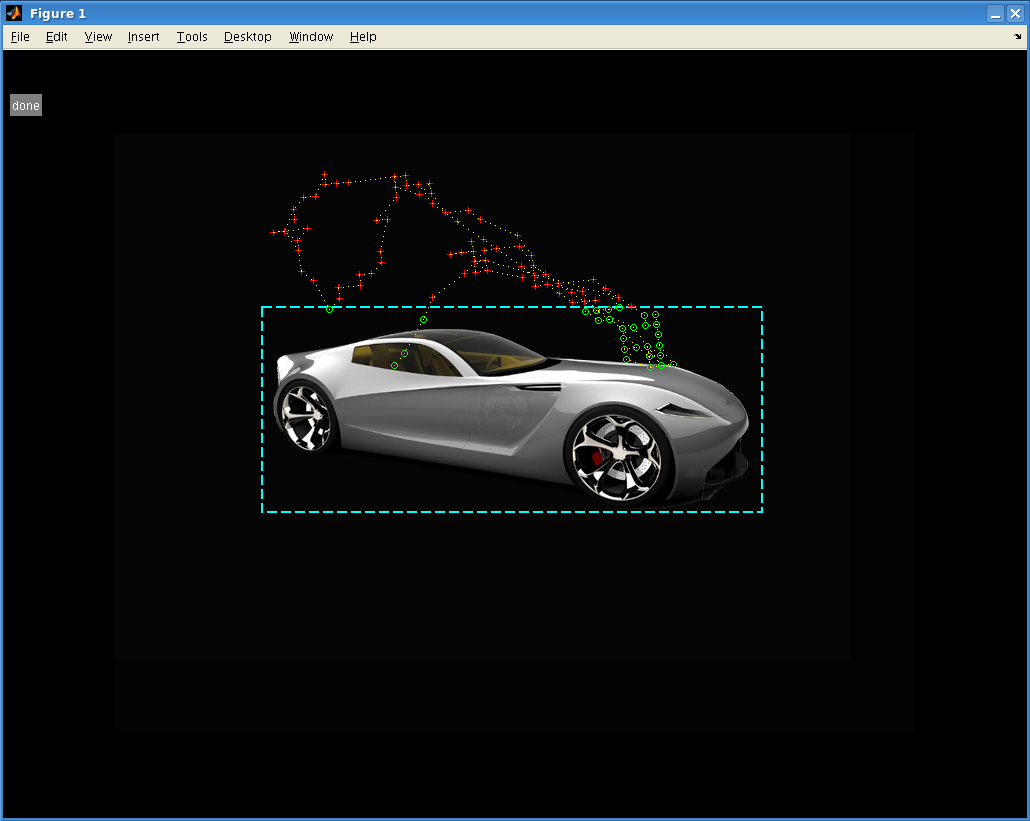

The output will look like this:

Hits are green circles inside the ROI, misses are red Xs outside. Since linesOn was turned on, there is a yellow gaze path from one point to the next. If withPause was turned on, there would be a slight pause between each point.

Currently, to move to the next image in the XML, just close the current figure. If you want to stop the redrawing, “ctrl + c” inside matlab to kill the loop. There are some updates planned to the controls.

Also, if you just have the behavioral XML and preprocessed ET data, you can run the function ET_plot instead. This will look at all images and ignore any ROIs; otherwise an ROI must be present for the image to get plotted.

PupilOmetry in Matlab

A matlab script based on pupilometry steps from Urry et al can be run to denoise pupil dilation data found in the preprocessed viewpoint WKS or cigal eyetracking files.

The result will be a pupilchange element added to your behavioral+ROI XML.

This example node has had all previous steps run; the pupil was roughly 3% smaller than during the 0.5 sec before the image:

    <event type="image" units="sec">
      <onset>17.141</onset>
      <duration>4</duration>
      <value name="imgName">HAI_21.bmp</value>
      <value name="regType">look</value>
      <value name="trialType">HAI</value>
      <value name="response">2</value>
      <value name="RT">1212</value>
      <roi type="block">
        <name>object</name>
        <origin>260 258</origin>
        <size>500 205</size>
        <units>pixels</units>
        <value name="hits">10</value>
        <value name="hit_percent">8.47</value>
      </roi>
      <value name="samples">118</value>
      <value name="pupilChange">-0.029751</value>
    </event>
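To make the meaning of a value like `pupilChange` concrete, the Python sketch below computes a relative change in mean pupil width between the image period and the 0.5 sec before it. This is an assumption about how the number can be read, inferred from the description above. It does not reproduce the denoising steps from Urry et al or the pupilOmetry function itself, and the name `pupil_change` is hypothetical.

```python
from statistics import mean


def pupil_change(samples, onset, duration, baseline=0.5):
    """Relative change in mean pupil width versus the pre-image baseline.

    samples: iterable of (time_sec, pupil_width)
    """
    before = [p for t, p in samples if onset - baseline <= t < onset]
    during = [p for t, p in samples if onset <= t < onset + duration]
    if not before or not during:
        return None  # not enough data to form a ratio
    base = mean(before)
    return (mean(during) - base) / base


# Hypothetical samples around an image shown at 17.141 s for 4 s.
samples = [(16.7, 0.100), (16.9, 0.100), (17.2, 0.097), (18.0, 0.097)]
print(pupil_change(samples, onset=17.141, duration=4))
```

A negative result means the pupil was smaller during the image than in the baseline window; here the change is close to -0.03, that is, about 3% smaller.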

The pupilOmetry function can be found at \\Munin\Data\Programs\User_Scripts\petty\matlab\; either add that directory to your matlab path or copy the function to a local location.

This example script will run pupilOmetry on preprocessed eyetracking data and create the new XML with the added element:

- run_pupilOmetry_sample.m

    %% add BIAC tools if needed
    %run /usr/local/packages/MATLAB/BIAC/startup.m

    %% add path to pupilOmetry function
    %addpath /home/petty/net/munin/data/Programs/User_Scripts/petty/matlab/
    %addpath \\Munin\Data\Programs\User_Scripts\petty\matlab\

    %%input XML ( merged behavioral + ROI )
    xmlName = '/home/petty/eyetracking/autreg/11436_run04_roiHits.xml';

    %%preprocessed eyetracking output
    ET_filename = '/home/petty/net/hill/data/Dichter/AutReg.01/Data/Eyetracking/subj1234_run1_pre.wks';

    %%the attribute to match images with from the inputXML
    imgAttr = 'imgName';

    %%output name of the XML with added pupilOmetry data. will be everything from input + pupilchange
    outName = '/home/petty/eyetracking/autreg/11436_cigal_pupil2.xml';

    %%show the denoising plots
    plot = 0; %1 to show, 0 to skip

    %%run the actual function
    pupilOmetry(xmlName,ET_filename,imgAttr,outName,plot);

Future

- If there are any suggestions for added features, or something just isn't right, please contact Chris.